One fine morning you are crossing the road to reach your office on the other side. Halfway across, you notice a driverless piece of metal, a robot, advancing towards you, and you are caught in a dilemma: should you keep crossing or not? One question presses on your mind: “Did the car notice me?” You feel relieved when you see the vehicle automatically slow down and make way for you. But hold on, what just happened? How did a machine get human-level intelligence?

In this article we will try to answer these questions by taking a close look at the sensors used in self-driving cars and how they are getting ready to drive the cars of our future. Before diving into that, let's catch up on the basics of autonomous vehicles: their driving standards, the major players, and their current stage of development and deployment. Throughout, we will focus on self-driving cars because they make up a major share of the autonomous vehicle market.

History of Self-Driving Cars

Self-driving cars first appeared in science fiction, but now they are almost ready to hit the roads. The technology didn't emerge overnight: experiments on self-driving cars began in the late 1920s with cars controlled remotely by radio waves. More promising trials followed in the 1950s and 1960s, funded and supported directly by research organizations such as DARPA.

Things became realistic only in the 2000s, when tech giants such as Google came forward and challenged established carmakers such as General Motors, Ford, and others. Google started by developing its self-driving car project, now called Waymo. The ride-hailing company Uber followed with its own self-driving car, competing with Toyota, BMW, Mercedes-Benz and other major players in the market, and around the same time Tesla, led by Elon Musk, also entered the market and spiced things up.

Driving Standards

There is a big difference between the terms self-driving car and fully autonomous car. The difference depends on the level of driving automation, explained below. These levels are defined in the J3016 standard of SAE (Society of Automotive Engineers), the international automotive engineering association, and in Europe by the Federal Highway Research Institute. It is a six-level classification from Level 0 to Level 5, where Level 0 means no automation at all, with the human in complete control of the vehicle.

Level 1 - Driver Assistance: The car provides low-level assistance such as acceleration control or steering control, but not both simultaneously. The major tasks such as steering, braking, and monitoring the surroundings are still handled by the driver.

Level 2 - Partial Automation: At this level the car can assist with both steering and acceleration, while most of the critical functions are still monitored by the driver. This is the most common level found in cars on the road today.

Level 3 - Conditional Automation: At Level 3 the car monitors the environment using sensors and takes necessary actions such as braking and steering, while the human driver remains available to intervene if any unexpected condition arises.

Level 4 - High Automation: This is a high level of automation in which the car is capable of completing the entire journey without human input. However, this comes with a condition: the driver can switch the car into this mode only when the system detects that the traffic conditions are safe and there is no traffic jam.

Level 5 - Full Automation: This level is for fully automated cars, which do not exist to date. Engineers are working to make it happen. Such a car will take us to our destination without any manual input to the steering or brakes.
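The six levels above map naturally onto a small enumeration. The following is a minimal sketch, not part of the SAE standard itself: the names `SaeLevel` and `driver_must_supervise` are hypothetical, and the supervision rule simply encodes the descriptions given in this article (a human is expected to monitor or stand by up to Level 3).

```python
from enum import IntEnum


class SaeLevel(IntEnum):
    """Driving automation levels as summarized in this article."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def driver_must_supervise(level: SaeLevel) -> bool:
    """Return True when a human still monitors or must be ready to intervene.

    Assumption: this follows the article's wording, where the driver is
    involved up to and including Level 3.
    """
    return level <= SaeLevel.CONDITIONAL_AUTOMATION


if __name__ == "__main__":
    for level in SaeLevel:
        print(level.value, level.name, driver_must_supervise(level))
```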

Various types of Sensors used in Autonomous/Self-Driving Vehicles

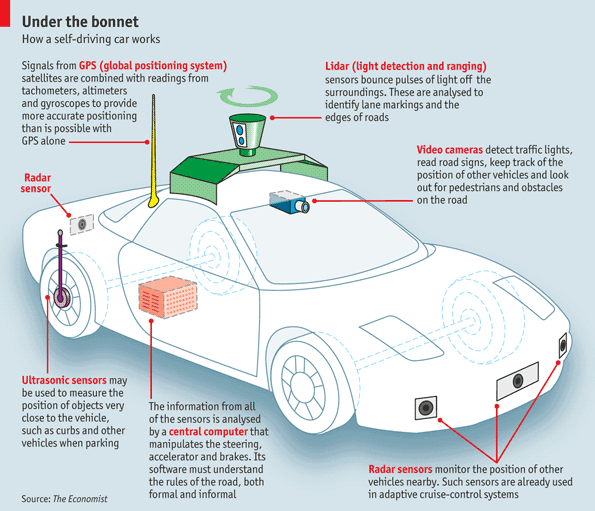

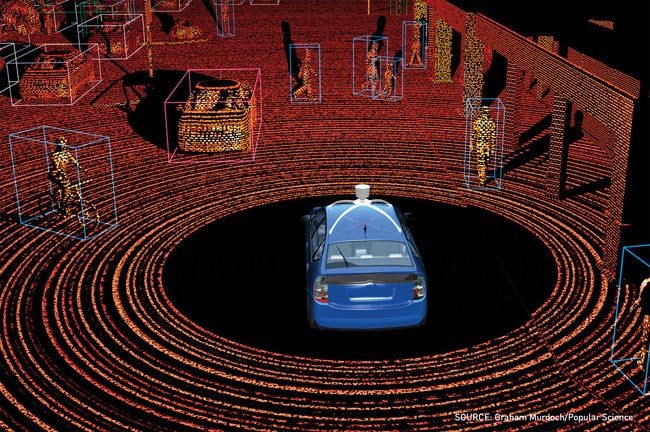

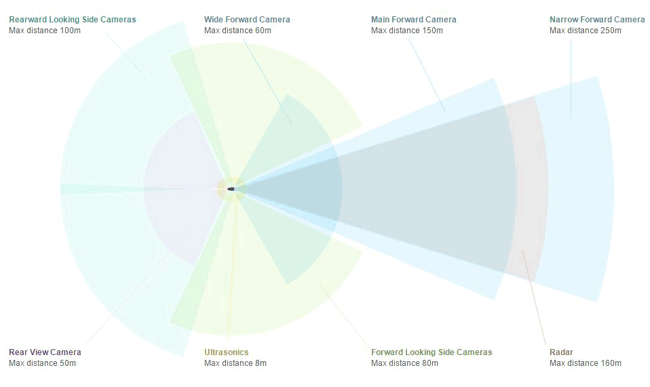

Various types of sensors are used in autonomous vehicles, but the major ones are cameras, RADARs, LIDARs and ultrasonic sensors. The position and type of sensors used in autonomous cars are shown in the accompanying figure.

All of these sensors feed real-time data to the Electronic Control Unit, also known as the Fusion ECU, where the data is processed to obtain 360-degree information about the surrounding environment. The most important sensors, which form the heart and soul of self-driving vehicles, are the RADARs, LIDARs and cameras, but we can't ignore the contribution of other sensors such as ultrasonic sensors, temperature sensors, lane detection sensors and GPS.
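To give a feel for what "fusing" sensor data can mean, here is a minimal sketch that combines distance estimates from several sensors by inverse-variance weighting, so that more precise sensors count for more. This is only an illustration under stated assumptions: real fusion ECUs use far more elaborate methods, and the `RangeReading` type, the `fuse_ranges` function and all numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass
class RangeReading:
    sensor: str         # e.g. "radar", "lidar", "camera" (labels are illustrative)
    distance_m: float   # estimated distance to the same object
    std_dev_m: float    # assumed measurement uncertainty (one standard deviation)


def fuse_ranges(readings: Sequence[RangeReading]) -> Tuple[float, float]:
    """Combine independent distance estimates by inverse-variance weighting.

    Returns the fused distance and its standard deviation.
    """
    if not readings:
        raise ValueError("at least one reading is required")
    weights = [1.0 / (r.std_dev_m ** 2) for r in readings]
    total = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    fused_std = (1.0 / total) ** 0.5
    return fused, fused_std


if __name__ == "__main__":
    readings = [
        RangeReading("radar", 41.0, 0.5),
        RangeReading("lidar", 40.2, 0.1),
        RangeReading("camera", 43.0, 2.0),
    ]
    distance, std = fuse_ranges(readings)
    print(f"fused distance: {distance:.2f} m (+/- {std:.2f} m)")
```

The fused estimate always lands closest to the sensor with the smallest assumed uncertainty, which is the basic intuition behind combining several imperfect sensors.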

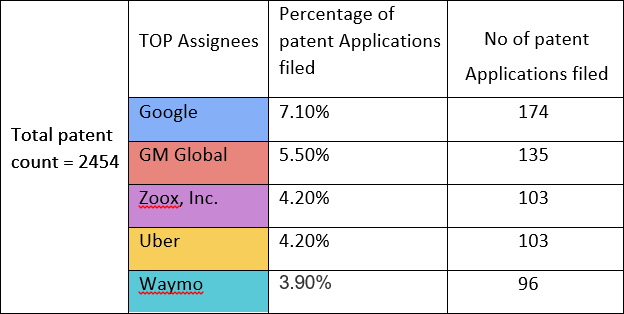

The accompanying graph comes from a research study conducted on Google Patents focusing on the usage of sensors in autonomous or self-driving vehicles. The study analyzes the number of patents filed on each technology (multiple sensors including LiDAR, sonar, radar and cameras for object and obstacle detection, classification and tracking) using the basic sensors found in every self-driving vehicle.

The graph shows the patent filing trend for self-driving vehicles with a focus on sensor usage. It suggests that the development of these vehicles with the help of sensors started around the 1970s, although progress was very slow at first. There could be many reasons for this, such as underdeveloped factories, a lack of proper research facilities and laboratories, the unavailability of high-end computing and, of course, the unavailability of high-speed internet, cloud and edge architectures for the computation and decision making of self-driving vehicles.

Between 2007 and 2010 this technology grew suddenly. During this period only a single company, General Motors, was responsible for it; in the following years tech giant Google joined the race, and now various companies are working on this technology.

In the coming years, a whole new set of companies can be expected to enter this technology area and take the research further in different ways.

RADARs in Self-Driving Vehicles

Radar plays an important role in helping vehicles understand their surroundings; we have already built a simple ultrasonic Arduino Radar system earlier. Radar technology first found widespread use during World War II, building on the 'telemobiloscope' patented by German inventor Christian Huelsmeyer, an early implementation of radar technology that could detect ships up to 3000 m away.

Fast forward to today, and the development of radar technology has brought many use cases across the world in the military, airplanes, ships and submarines.

How Radar Works?

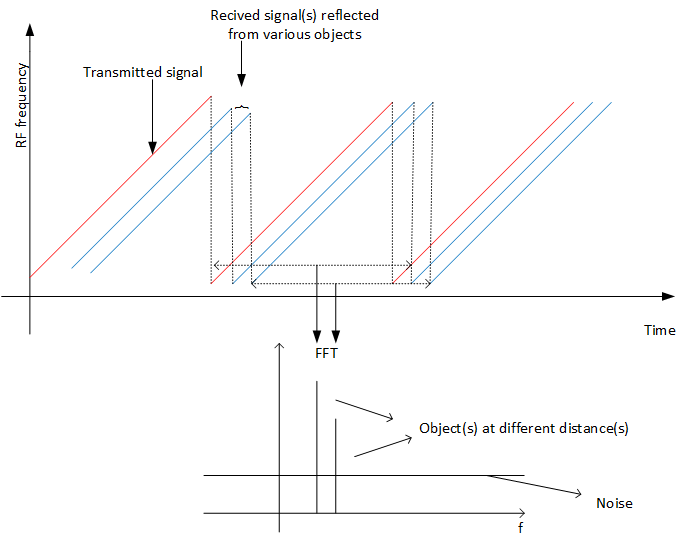

RADAR is an acronym for radio detection and ranging, and as the name suggests, it works on radio waves. A transmitter sends out radio signals, and if there is an object or obstacle in the way, these radio waves are reflected back to the radar receiver. The difference between the transmitted and received frequency is proportional to the travel time and can be used to measure distances and distinguish between different types of objects.

The image below shows the radar transmission and reception graph, where the red line is the transmitted signal and the blue lines are the signals received from different objects over time. Since we know the timing of the transmitted and received signals, we can perform FFT analysis to calculate the distance of each object from the sensor.

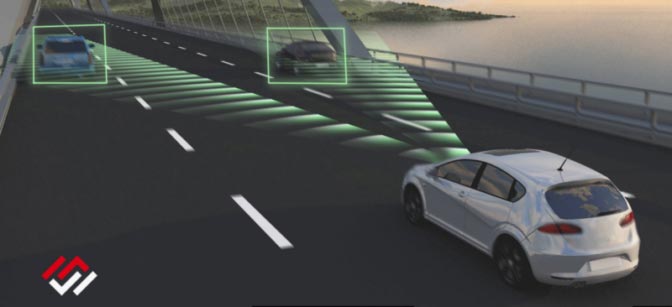
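The relationship between frequency difference and distance described above can be sketched in a few lines. The example assumes a frequency-modulated (chirp) radar, where the frequency difference ("beat" frequency) between transmitted and received signal grows linearly with the round-trip delay. All parameters (150 MHz bandwidth, 1 ms chirp, 256 samples) and function names are hypothetical, and a plain discrete Fourier transform stands in for an optimized FFT so the code needs only the standard library.

```python
import cmath
import math

C = 3.0e8  # speed of light in m/s (rounded)


def beat_signal(range_m, bandwidth_hz, chirp_s, n_samples):
    """Simulate the beat signal of one chirp for a single target."""
    slope = bandwidth_hz / chirp_s           # how fast the frequency sweeps (Hz/s)
    f_beat = slope * 2.0 * range_m / C       # frequency difference caused by the delay
    dt = chirp_s / n_samples
    return [math.cos(2.0 * math.pi * f_beat * k * dt) for k in range(n_samples)]


def dominant_bin(samples):
    """Return the index of the strongest frequency bin (naive DFT, skipping DC)."""
    n = len(samples)
    best_bin, best_mag = 0, 0.0
    for b in range(1, n // 2):
        acc = sum(s * cmath.exp(-2j * math.pi * b * k / n)
                  for k, s in enumerate(samples))
        if abs(acc) > best_mag:
            best_bin, best_mag = b, abs(acc)
    return best_bin


def estimate_range(samples, bandwidth_hz, chirp_s):
    """Convert the dominant beat frequency back into a distance in meters."""
    # The samples span exactly one chirp, so the bin spacing is 1 / chirp_s.
    f_beat = dominant_bin(samples) / chirp_s
    return C * f_beat * chirp_s / (2.0 * bandwidth_hz)


if __name__ == "__main__":
    bandwidth, chirp, n = 150e6, 1e-3, 256
    samples = beat_signal(50.0, bandwidth, chirp, n)
    print(f"estimated range: {estimate_range(samples, bandwidth, chirp):.1f} m")
```

With these assumed parameters each frequency bin corresponds to one meter of range (c / 2B), which is why a wider sweep bandwidth gives finer distance resolution.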

Use of RADAR in Self Driving Cars

RADAR is one of the sensors that ride behind the car's sheet metal to make it autonomous. The technology has been in production cars for about 20 years, and it makes adaptive cruise control and automatic emergency braking possible. Unlike vision systems such as cameras, it can see at night or in bad weather and can measure the distance and speed of an object from hundreds of yards away.

The downside of RADAR is that even highly advanced radars can't perceive their environment in detail. Suppose you are a cyclist standing in front of a car: the radar can't tell for certain that you are a cyclist, but it can identify you as an object or obstacle and take the necessary action. It also can't tell which direction you are facing; it can only detect your speed and direction of movement.

To drive like humans, vehicles must first see like humans. Unfortunately, RADAR does not capture much detail, so it has to be used in combination with other sensors in autonomous vehicles. Most companies building self-driving cars, such as Uber, Toyota and Waymo, rely heavily on another sensor called LiDAR, which captures far more detail but has a range of only a few hundred meters. The notable exception is Tesla, which uses RADAR as its prime sensor; Musk is confident that they will never need LiDAR in their systems.

Earlier there was not much development happening in radar technology, but given its importance in autonomous vehicles, various tech companies and startups are now advancing RADAR systems. The companies that are reinventing the role of RADAR in mobility are listed below.

BOSCH

Bosch’s latest version of RADAR helps create a local map over which the vehicle can drive. Bosch uses a map layer in combination with RADAR that allows the vehicle to work out its location from GPS and RADAR information, similar to creating road signatures.

By combining the inputs from GPS and RADAR, Bosch’s system can take real-time data, compare it to the base map, match the patterns between the two, and determine its location with high accuracy.

With the help of this technology, cars can drive themselves in bad weather conditions without relying much on cameras and LiDARs.

WaveSense

WaveSense is a Boston-based RADAR company which believes that self-driving cars do not need to perceive their surroundings the same way humans do.

Unlike other systems, their RADAR uses ground-penetrating waves to see through the road and create a map of what lies beneath the road surface. Their system transmits radio waves 10 feet below the road and receives the reflected signal, which maps the soil type, density, rocks, and infrastructure.

The map is a unique fingerprint of the road. Cars can compare their position to a preloaded map and localize themselves to within 2 centimeters horizontally and 15 centimeters vertically.

WaveSense's technology also does not depend on weather conditions. Ground-penetrating radar has traditionally been used in archaeology, pipeline work and rescue operations; WaveSense is the first company to use it for automotive purposes.
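The idea of localizing against a stored "fingerprint" can be illustrated with a toy one-dimensional example: slide a short live scan along a preloaded signature and pick the position where the two agree best. This is only a sketch of the general pattern-matching idea, not WaveSense's or Bosch's actual algorithm; the function name `locate` and the sample values are hypothetical.

```python
def locate(signature_map, scan):
    """Return the index in signature_map where scan matches best.

    Matching uses the sum of squared differences; smaller means more similar.
    """
    if not scan or len(scan) > len(signature_map):
        raise ValueError("scan must be non-empty and no longer than the map")
    best_offset, best_err = 0, float("inf")
    for offset in range(len(signature_map) - len(scan) + 1):
        err = sum((signature_map[offset + i] - s) ** 2
                  for i, s in enumerate(scan))
        if err < best_err:
            best_offset, best_err = offset, err
    return best_offset


if __name__ == "__main__":
    # Preloaded signature: one reflection-strength value per road segment (made up).
    road_map = [0.1, 0.4, 0.9, 0.3, 0.7, 0.2, 0.8, 0.5]
    # Slightly noisy live scan taken somewhere along that road.
    live_scan = [0.31, 0.69, 0.21]
    print("vehicle is at map index", locate(road_map, live_scan))
```

A real system would match in two or three dimensions and combine the result with GPS, but the principle of comparing live data against a base map is the same.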

Lunewave

Sphere-shaped antennas have been known to the RADAR industry since they were introduced in the 1940s by the German physicist Rudolf Luneburg. They can provide 360-degree sensing capability, but until now the problem was that they were tough to manufacture in a size small enough for automotive use.

With the advent of 3D printing, they can now be produced easily. Lunewave is using 3D printing to build 360-degree antennas roughly the size of a ping-pong ball.

The unique design of the antenna allows the RADAR to sense obstacles at a distance of 380 yards, nearly double what a normal antenna can achieve. Further, the sphere permits 360-degree sensing from a single unit, rather than the traditional 20-degree view. Its small size makes it easier to integrate into the system, and the reduction in RADAR units decreases the multi-image stitching load on the processor.
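A quick back-of-the-envelope calculation shows why a wider field of view reduces the number of units. The sketch below assumes ideal sensors with no overlap between neighbouring fields of view, which real installations would not have; the function name is hypothetical.

```python
import math


def units_for_full_coverage(field_of_view_deg):
    """Minimum number of identical sensors needed to cover 360 degrees.

    Assumption: fields of view tile perfectly with no overlap.
    """
    if not 0 < field_of_view_deg <= 360:
        raise ValueError("field of view must be in the range (0, 360]")
    return math.ceil(360 / field_of_view_deg)


if __name__ == "__main__":
    for fov in (20, 120, 360):
        print(f"{fov:>3} degree view -> {units_for_full_coverage(fov)} unit(s)")
```

Under these assumptions, the 20-degree view mentioned above would need 18 units to see all around the car, while a 360-degree antenna needs one.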

LiDARs in Self-Driving Vehicles

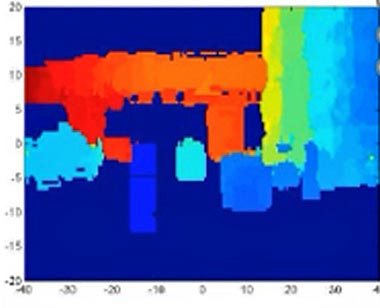

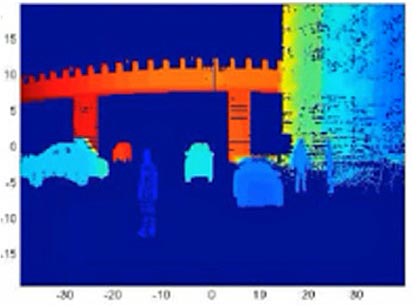

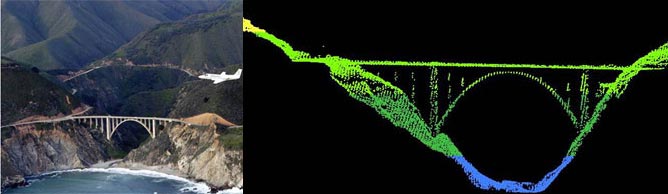

LiDAR stands for Light Detection and Ranging. It is an imaging technique just like RADAR, but instead of radio waves it uses light (laser) to image the surroundings. It can easily generate a 3D map of the surroundings with the help of a point cloud. It can't match the resolution of a camera, but it is still clear enough to tell the direction in which an object is facing.

How LiDAR works?

LiDAR can usually be seen on top of self-driving vehicles as a spinning module. As it spins, it emits light at a high rate, about 150,000 pulses per second, and measures the time the pulses take to return after hitting the obstacles ahead. Since light travels at about 300,000 kilometers per second, the distance to an obstacle follows from the formula Distance = (Speed of Light x Time of Flight) / 2. As the distances of different points in the environment are gathered, they form a point cloud that can be interpreted as 3D images. LiDAR usually measures the actual dimensions of objects, which is an advantage when used in vehicles. You can learn more about LiDAR and its working in this article.
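The time-of-flight formula and the point cloud idea can be sketched as follows. Each laser return is assumed to come with the beam's horizontal angle (azimuth) and vertical angle (elevation); the function names, the angle convention and the sample values are hypothetical, and the code uses the exact speed of light rather than the rounded figure quoted above.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_to_distance(time_of_flight_s):
    """Distance = (speed of light x time of flight) / 2, in meters."""
    return SPEED_OF_LIGHT_M_S * time_of_flight_s / 2.0


def to_point(distance_m, azimuth_deg, elevation_deg):
    """Convert one range measurement and its beam angles to (x, y, z) in meters."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = distance_m * math.cos(el)   # projection onto the ground plane
    return (horizontal * math.cos(az),
            horizontal * math.sin(az),
            distance_m * math.sin(el))


def build_point_cloud(returns):
    """returns: iterable of (time_of_flight_s, azimuth_deg, elevation_deg)."""
    return [to_point(tof_to_distance(t), az, el) for t, az, el in returns]


if __name__ == "__main__":
    # Three made-up laser returns from one sweep of the sensor.
    sweep = [(2.0e-7, 0.0, 0.0), (3.0e-7, 45.0, 2.0), (1.0e-7, 90.0, -5.0)]
    for x, y, z in build_point_cloud(sweep):
        print(f"x={x:7.2f} m  y={y:7.2f} m  z={z:6.2f} m")
```

The division by two accounts for the pulse travelling to the obstacle and back, so a return after one microsecond corresponds to an obstacle roughly 150 meters away.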

Use of LiDAR in Cars

Although LiDAR seems to be an impeccable imaging technology, it has its own drawbacks, such as:

- High operative cost and tough maintenance

- Ineffective during heavy rain

- Poor imaging at places with high sun angles or strong reflections

Despite these drawbacks, companies like Waymo are investing heavily in this technology to make it better, as they rely on it for their vehicles; Waymo even uses LiDARs as its primary sensor for imaging the environment.

But there are still companies like Tesla that oppose the use of LiDARs in their vehicles. Tesla CEO Elon Musk commented on the use of LiDAR: “lidar is a fool’s errand and anyone relying on lidar is doomed.” His company has pursued self-driving without LiDARs; the sensors used in a Tesla and their coverage range are shown below.

This stands in direct opposition to companies like Ford, GM Cruise, Uber and Waymo, who think LiDAR is an essential part of the sensor suite. Musk said, “LiDAR is lame, They’re gonna dump LiDAR, mark my words. That’s my prediction.” University researchers have also backed Musk's decision to drop LiDAR, since two inexpensive cameras on either side of a vehicle can detect objects with nearly LiDAR’s accuracy at just a fraction of the cost. The cameras placed on either side of a Tesla car are shown in the image below.

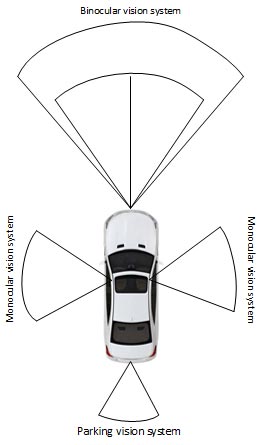

Cameras in Self-Driving Vehicles

All self-driving vehicles use multiple cameras to get a 360-degree view of the surrounding environment. Cameras on each side (front, rear, left and right) are used, and the images are finally stitched together into a 360-degree view. Some of the cameras have a wide field of view, as much as 120 degrees, and a shorter range, while others focus on a narrower view to provide long-range visuals. Some cameras in these vehicles have a fish-eye effect for a super-wide panoramic view. All these cameras are used with computer vision algorithms that perform the analytics and detection for the vehicle. You can also check out other image processing related articles that we have covered earlier.

Use of Camera in Cars

Cameras have been used in vehicles for a long time in applications such as parking assistance and monitoring the rear of the car. Now, as self-driving technology develops, the role of cameras in vehicles is being rethought. By providing a 360-degree view of the surrounding environment, cameras help vehicles drive autonomously on the road.

To get a surround view of the road, cameras are integrated at different locations on the vehicle: at the front a wide-view camera sensor, also known as a binocular vision system, is used; on the left and right sides monocular vision systems are used; and at the rear a parking camera is used. All these camera units send their images to the control unit, which stitches them into a surround view.
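A binocular (two-camera) system can estimate how far away an object is from the shift, or disparity, between its position in the left and right images. The sketch below uses the standard relation depth = focal length x baseline / disparity, assuming an idealized, calibrated and rectified camera pair; the function name and the numbers are hypothetical and do not describe any particular vehicle.

```python
def stereo_depth_m(focal_length_px, baseline_m, disparity_px):
    """Depth of a point seen by two parallel cameras.

    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two camera centers, in meters
    disparity_px:    horizontal shift of the point between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Assumed rig: 1000 px focal length, cameras mounted 0.5 m apart.
    for disparity in (100, 20, 5):
        depth = stereo_depth_m(1000, 0.5, disparity)
        print(f"disparity {disparity:>3} px -> depth {depth:.1f} m")
```

Because depth is inversely proportional to disparity, distant objects produce very small shifts, so the accuracy of camera-based depth falls off with range.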

Other Types of Sensors in Self-Driving Vehicles

Besides the above three sensors, other types of sensors are used in self-driving vehicles for various purposes such as lane detection, tire-pressure monitoring, temperature control, exterior lighting control, telematics, headlight control etc.

The future of self-driving vehicles is exciting, and the technology is still under development. In the future many more companies will join the race, and many new laws and standards will be created to ensure the safe use of this technology.