On average, about 2,500 songs are uploaded every hour to Spotify, Apple Music, YouTube, and other streaming platforms. That adds up to roughly 60,000 songs a day and more than 400,000 tracks every week. The average American listener spends almost 3-4 hours a day listening to their favorite songs. But have you ever wondered about the technology behind these songs, or been amazed at hearing different instruments simultaneously?

Each instrument, be it a guitar, flute, or drum, has a distinct sound and seems to come from a different location, even though all of the sound flows to your ears through a couple of wires. How is that possible?

In the real world, we hear a sound when our eardrums vibrate. A sound source sets the surrounding air vibrating, and those vibrations travel through the air until they reach our eardrums and make them move back and forth. High-pitched sounds make the eardrums move back and forth more times per second than low-pitched sounds.

Basic Concepts of Digital Audio

This article is meant to acquaint you with the basic concepts of digital audio and the related terminology. If any of the following questions interest you, this is a good place to start!

Do you want to know:

- What does audio digitization mean?

- What is the process of sampling and digitization of audio signals?

- How are sampling rate, bit-depth, and resolution related to sampling and quantization?

- What are Mono, Stereo and Surround sound in digital audio?

If yes, then let’s delve deep into the basic concepts of digital audio.

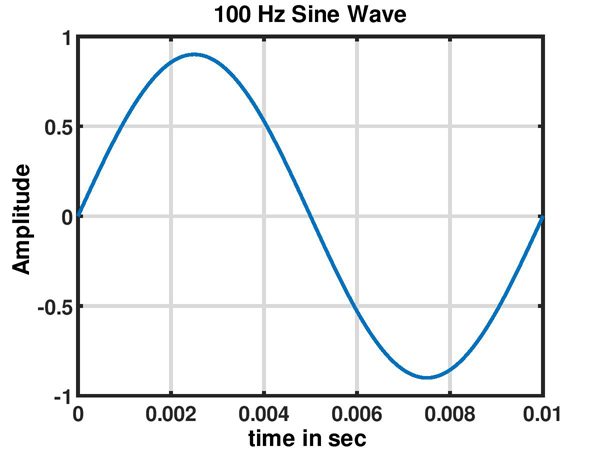

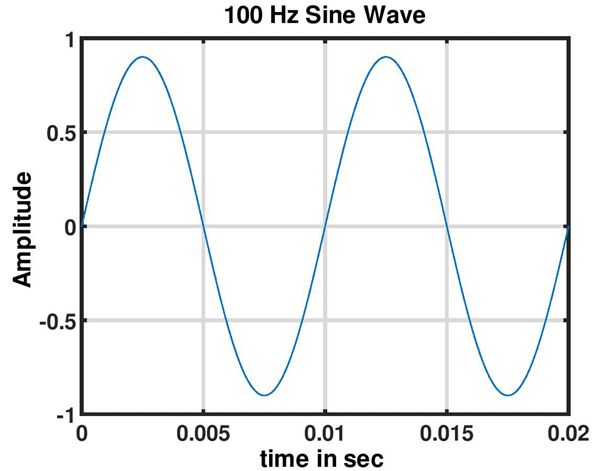

Amplitude & Frequency

Two of the most important aspects of analog audio are amplitude and frequency. Let’s discuss the basic properties of sound waves and explore why different sounds vary from each other.

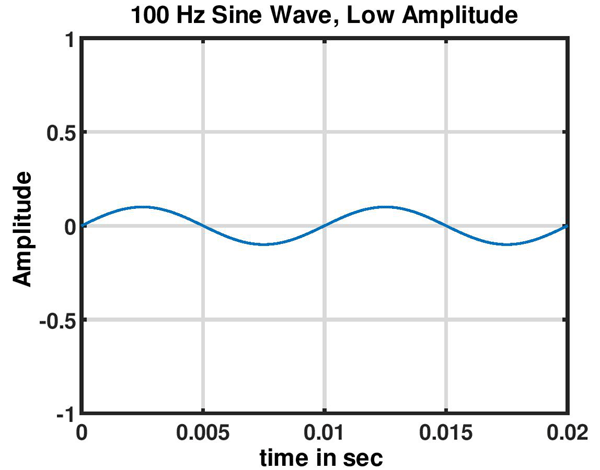

Amplitude: In audio, amplitude refers to the intensity of the compression and expansion experienced by the medium (mostly air) the sound is traveling through. It is measured in decibels (dB) and perceived by us as loudness. The higher the amplitude, the louder the sound.

Frequency: Frequency is the number of vibrations experienced by the medium in one second. It is measured in hertz (Hz) and is perceived as pitch. Low-frequency sound travels farther than high-frequency sound. For example, the frequency of a drum beat is lower than the frequency of a flute.

Fig 1: Low-frequency signal

Fig 2: Low-amplitude signal

Humans can hear frequencies between 20 Hz and 20,000 Hz. Frequencies above 20,000 Hz are called ultrasound and frequencies below 20 Hz are called infrasound; neither can be heard by humans.

What is “Digital Audio”?

Digital audio is the technology used to record, process, store, and transmit audio signals using computer systems, including over the internet. Computers can only understand signals that have been encoded as binary numbers, but audio is inherently analog, which processors can’t interpret directly.

To represent audio signals in a way that computers can understand and process, the data needs to be converted into digital (binary) form.

The process requires several steps. Analog audio signals are continuous waveforms, often pictured as sinusoidal waves, whereas digital audio consists of discrete points that record the amplitude of the waveform at specific instants. Continuous signals must be converted to discrete signals because computers can only work with a finite, countable set of values taken at regular time intervals.

Sampling & Quantization, or Digitization of Audio Signals

The conversion process starts with analog-to-digital conversion (ADC). An ADC must accomplish two tasks: sampling and quantization. Sampling is the process of capturing samples (amplitude values) of the signal at regular time intervals. The sampling rate is the number of samples taken per second, measured in hertz (Hz). If we record 48,000 samples per second, the sampling rate is 48,000 Hz, or 48 kHz.

Sampling rate (Fs)= 48 kHz

Sampling period (Ts)= 1/Fs
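To make the relation between Fs and Ts concrete, here is a minimal Python sketch that samples a pure sine tone. The function name `sample_sine`, the 1 kHz test tone, and the amplitude of 1.0 are illustrative assumptions, not values from a real recording.

```python
import math

def sample_sine(freq_hz, amplitude, sample_rate_hz, num_samples):
    """Return amplitude values of a sine tone captured every Ts seconds."""
    ts = 1.0 / sample_rate_hz  # sampling period Ts = 1 / Fs
    return [amplitude * math.sin(2 * math.pi * freq_hz * n * ts)
            for n in range(num_samples)]

fs = 48_000          # sampling rate Fs in Hz
ts = 1.0 / fs        # sampling period Ts in seconds

# Assumed test tone: 1 kHz, so 48 samples cover exactly one cycle at 48 kHz.
samples = sample_sine(freq_hz=1_000, amplitude=1.0,
                      sample_rate_hz=fs, num_samples=48)

print(f"Ts = {ts * 1e6:.2f} microseconds")   # Ts = 20.83 microseconds
print(f"samples in one cycle: {len(samples)}")
```

At 48 kHz, one sample is taken roughly every 20.83 microseconds, and the peak of the 1 kHz tone falls on the 13th sample (index 12), a quarter of the way through the cycle.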

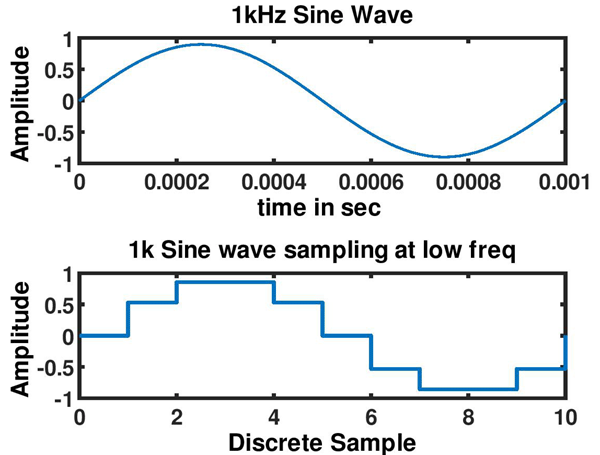

Fig 3: Sampling of audio signals at a lower frequency of 100Hz

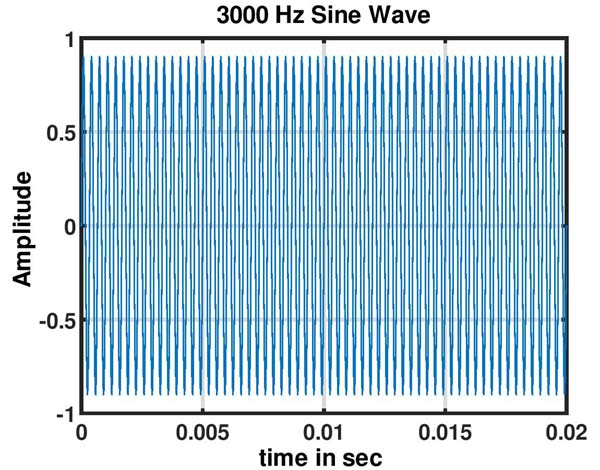

Fig 4: Sampling of audio signals at a higher frequency of 3000Hz

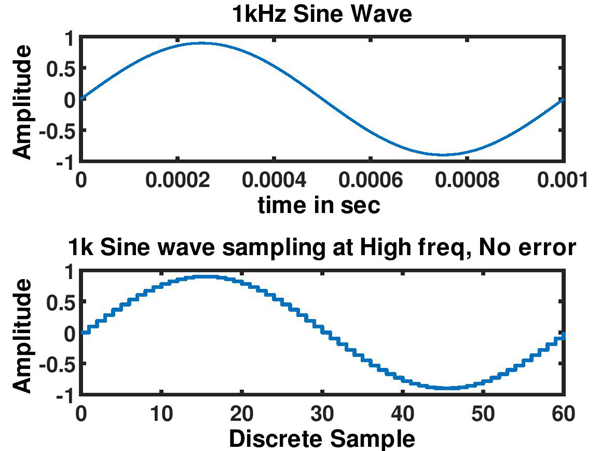

Sampling Rate vs. Audio Frequency

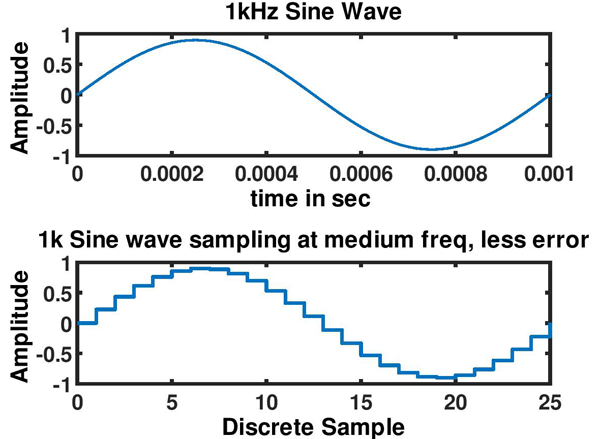

A smaller sampling interval means a higher sampling rate, which lets the digital signal capture higher audio frequencies and gives better-quality sound, at the cost of a larger file size. So, clearly, the sampling rate should be high enough to digitize the signal without losing information.

Frequencies above half the sampling rate can’t be represented in digital samples. As per the Nyquist theorem, a continuous-time signal can be perfectly reconstructed from its digital samples when the sampling rate is more than double the highest audio frequency in the signal.

Nyquist criterion: The sampling rate should be more than double Fmax, the highest frequency in the signal. Half the sampling rate (Fs/2) is known as the Nyquist frequency.

Fs > 2 Fmax
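The condition Fs > 2 Fmax can be checked with a couple of lines of Python. This is only a sketch: the helper names `nyquist_frequency` and `satisfies_nyquist` are made up for illustration, and the 20 kHz value is the upper limit of human hearing mentioned earlier.

```python
def nyquist_frequency(sample_rate_hz):
    """Highest frequency representable at this sampling rate (Fs / 2)."""
    return sample_rate_hz / 2

def satisfies_nyquist(sample_rate_hz, f_max_hz):
    """True when Fs > 2 * Fmax, i.e. the signal can be sampled without aliasing."""
    return sample_rate_hz > 2 * f_max_hz

f_max = 20_000  # upper limit of human hearing, in Hz

for fs in (38_000, 44_100, 48_000):
    print(fs, nyquist_frequency(fs), satisfies_nyquist(fs, f_max))
```

Of the three rates tried here, 38 kHz fails the check for a 20 kHz signal, while 44.1 kHz and 48 kHz both pass.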

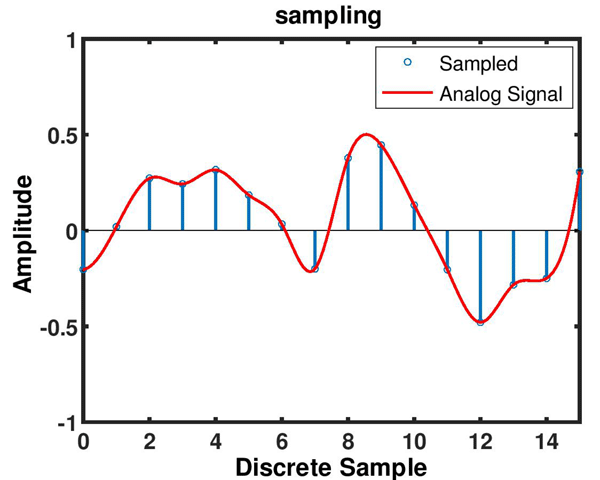

Fig 5: Sampling of audio signals

Aliasing: Aliasing is a type of artifact or distortion that occurs when a signal is sampled at less than twice the highest audio frequency present in the signal. As a result, the signal reconstructed from the samples differs from the original continuous signal. Whether aliasing occurs depends on the frequency content and the sampling rate of the signal. For instance, if a signal is sampled at a 38 kHz sampling rate, any frequency components above 19 kHz create aliasing.

Anti-aliasing Filters: Aliasing can be avoided by using low-pass filters known as anti-aliasing filters. These filters are applied to the input signal before sampling to restrict its bandwidth. Anti-aliasing filters remove the components above the Nyquist frequency, so the signal can be reconstructed from its digital samples without aliasing distortion.
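The 38 kHz example can be explored with a short Python sketch. The function `alias_frequency` is a hypothetical helper that applies the standard frequency-folding rule for a pure tone, and the 10 kHz and 25 kHz test tones are assumed values chosen to sit below and above 19 kHz.

```python
import math

def alias_frequency(freq_hz, sample_rate_hz):
    """Frequency in the range 0..Fs/2 that a sampled tone is indistinguishable from."""
    nearest_multiple = round(freq_hz / sample_rate_hz) * sample_rate_hz
    return abs(freq_hz - nearest_multiple)

fs = 38_000  # sampling rate from the example above

print(alias_frequency(10_000, fs))  # 10000: below 19 kHz, so unchanged
print(alias_frequency(25_000, fs))  # 13000: above 19 kHz, folds down

# Sampled at 38 kHz, a 25 kHz cosine produces the same values as a 13 kHz cosine.
tone = [math.cos(2 * math.pi * 25_000 * n / fs) for n in range(8)]
alias = [math.cos(2 * math.pi * 13_000 * n / fs) for n in range(8)]
print(all(math.isclose(a, b, abs_tol=1e-9) for a, b in zip(tone, alias)))  # True
```

Once the samples are taken, nothing distinguishes the 25 kHz tone from a 13 kHz one, which is why the out-of-band content has to be removed before sampling rather than afterwards.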

Bit-depth: In a nutshell, bit-depth is the number of bits available for each sample. Computers only understand and store information in binary digits, i.e., 1 or 0. These binary digits are called bits. The more bits there are, the more information can be stored. Hence, the higher the bit-depth, the more precisely the amplitude of each sample is captured.

Dynamic range: Bit-depth also determines the dynamic range of the signal, i.e., the span between the quietest and loudest levels that can be represented. When the sampled signal is quantized to the nearest value within a given range, the number of available values in that range is determined by the bit-depth. Dynamic range is expressed in decibels (dB). In digital audio, 24-bit audio has a maximum dynamic range of about 144 dB, whereas 16-bit audio has a maximum of about 96 dB.

16-bit digital audio with a sampling rate of 44.1 kHz is widely used for consumer audio, whereas 24-bit audio with a sampling rate of 48 kHz is used in professional audio for recording, mixing, storing, and editing content.
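The 96 dB and 144 dB figures follow from the usual rule of thumb that the theoretical dynamic range of N-bit linear audio is 20·log10(2^N), or about 6.02 dB per bit. The sketch below assumes that idealized formula; the function name `dynamic_range_db` is illustrative, and real converters typically achieve somewhat less.

```python
import math

def dynamic_range_db(bit_depth):
    """Theoretical dynamic range of linear PCM: 20 * log10(2 ** bit_depth)."""
    return 20 * math.log10(2 ** bit_depth)

for bits in (8, 16, 24):
    print(f"{bits}-bit: {dynamic_range_db(bits):.1f} dB")
```

Each additional bit doubles the number of amplitude levels and adds roughly 6 dB, which gives about 96 dB for 16-bit and about 144 dB for 24-bit audio.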
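Sampling rate and bit-depth together determine how much data uncompressed audio produces, which is where the larger file sizes of higher-quality audio come from. The following sketch assumes plain uncompressed PCM and two channels (stereo); the function names are hypothetical, and compressed formats such as those used by streaming services need far less.

```python
def pcm_bit_rate(sample_rate_hz, bit_depth, channels):
    """Bits per second of uncompressed PCM audio."""
    return sample_rate_hz * bit_depth * channels

def pcm_bytes_per_minute(sample_rate_hz, bit_depth, channels):
    """Bytes needed to store one minute of uncompressed PCM audio."""
    return pcm_bit_rate(sample_rate_hz, bit_depth, channels) * 60 // 8

# Assumption: two channels (stereo) in both cases.
consumer = pcm_bit_rate(44_100, 16, 2)       # 1,411,200 bits per second
professional = pcm_bit_rate(48_000, 24, 2)   # 2,304,000 bits per second

print(consumer, pcm_bytes_per_minute(44_100, 16, 2))
print(professional, pcm_bytes_per_minute(48_000, 24, 2))
```

Under these assumptions, one minute of 16-bit/44.1 kHz stereo takes about 10.6 MB, while 24-bit/48 kHz stereo takes about 17.3 MB.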

Quantization of Audio Signals

Quantization is the process of mapping an analog audio signal, whose amplitude can take infinitely many values, to a digital audio signal with a finite, countable set of values during analog-to-digital conversion (ADC). Bit-depth plays an important role in determining the accuracy and quality of the quantized values. If an audio signal uses 16 bits per sample, then the maximum number of amplitude values that can be represented is 2^16 = 65,536.

This means that the amplitude range of the signal is divided into 65,536 levels, and each sample is assigned the nearest discrete value from this range. During this process, a small loss in audio quality may occur, but it is not usually perceptible to human ears. This loss is due to the difference between the input value and the quantized value, and is described as quantization error.
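A minimal sketch of quantization and the resulting error is shown below. It assumes samples normalized to the range -1.0 to 1.0 and a simple round-to-nearest scheme with signed integer codes; `quantize`, `dequantize`, and the sample value 0.3 are illustrative choices, and real converters may use dithering or other refinements.

```python
def quantize(x, bit_depth):
    """Map x in [-1.0, 1.0] to the nearest signed integer code of the given bit-depth."""
    half = 2 ** bit_depth // 2                # e.g. 32768 for 16-bit
    code = round(x * half)
    return max(-half, min(half - 1, code))    # clip to the representable range

def dequantize(code, bit_depth):
    """Convert an integer code back to an amplitude in [-1.0, 1.0)."""
    return code / (2 ** bit_depth // 2)

x = 0.3  # assumed input amplitude
for bits in (4, 8, 16):
    code = quantize(x, bits)
    error = x - dequantize(code, bits)        # quantization error
    print(f"{bits}-bit: code={code}, error={error:.6f}")
```

For values inside the representable range, the error never exceeds half of one quantization step, so it shrinks quickly as the bit-depth grows: at 16 bits the step is 1/32768 of full scale.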

Fig 6: Relation between sampling at different frequencies and quantization error, panels (i) to (iii)

Mono, Stereo and Surround Sound in Digital Audio

Mono (monophonic) sound is a system in which all the sounds are combined and passed through a single channel. Even if there are multiple speakers, each one plays the same signal, so the sound appears to come from a single source.

Stereo (stereophonic) sound, in contrast, uses two independent channels (left & right), which gives the effect of sounds coming from different directions depending on which speaker each signal is sent to. It gives listeners the illusion of multi-dimensional audio and provides uniform coverage across the left and right channels.

Stereo sound has been replacing mono sound because its additional channel gives a better listening experience.

Surround sound enriches the fidelity of audio reproduction by using multiple channels. It lets audiences experience sound coming from three or more directions. In addition to the left, right, and center channels at the front, surround channels placed to the sides of or behind the listeners give the sensation of sound coming from all directions. It is widely used in audio systems such as home theaters, with formats from Dolby and DTS available in 5.1 and 7.1 channel configurations.
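In digital form, the difference between mono and stereo is simply the number of sample streams. The sketch below shows one common way to fold a stereo pair into a single mono channel by averaging the two; the function name `downmix_to_mono` and the sample values are made up for illustration, and other downmix weightings exist.

```python
def downmix_to_mono(left, right):
    """Combine two channels into one by averaging corresponding samples."""
    if len(left) != len(right):
        raise ValueError("left and right channels must have the same length")
    return [(l + r) / 2 for l, r in zip(left, right)]

# Hypothetical stereo samples: a sound present only in the left channel,
# followed by one present equally in both.
left = [1.0, 0.5, 0.25, 0.25]
right = [0.0, 0.0, 0.25, 0.25]

mono = downmix_to_mono(left, right)
print(mono)  # [0.5, 0.25, 0.25, 0.25]
```

After the downmix, the sound that existed only on the left is still audible but no longer has a direction, which is the loss of spatial information that separates mono from stereo.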

Conclusion

Digital audio converts analog signals into a discrete (binary) form in which they can be stored and manipulated on a computer system. Digital audio systems are all around us nowadays, be it in phones, music systems, computers, home audio systems, conferencing devices, or other smart devices. Digital audio brings many benefits beyond what traditional analog music systems offer for recording and playing songs. Along with various personalization features, it provides high-quality audio, reliability, more storage capacity, wireless connectivity, portability, and a truly immersive experience for users.

Various new technologies are being introduced, such as 3D audio effects, surround sound, and formats like Dolby Atmos, Dolby Digital, and DTS Virtual:X, to give listeners a surreal experience. Disruptive technologies like artificial intelligence and machine learning are providing a more personalized experience to users. The possibilities are endless!
