In the last few years, Augmented Reality (AR) and Virtual Reality (VR) have grown rapidly. These technologies help people understand complex things by making visualization easier and more effective. They make it easy to visualize objects in three dimensions, not only creating virtual images of imaginary objects but also building 3D images of real objects.

One of the earliest virtual reality experiments was carried out by Ivan Sutherland in 1968. He built a huge, mechanically mounted head-mounted display that was so heavy it was named the “Sword of Damocles”. A sketch of it is given below.

The term “Augmented Reality” was coined by two Boeing researchers in 1992. They wanted a way to analyze aircraft parts without disassembling them.

Google has already launched ARCore, which helps developers build AR content for smartphones. Many smartphones support ARCore: you just need to download an AR app to experience it, with no other requirements. Google publishes a list of ARCore-supported smartphones.

Let’s dive into the world of AR and VR by understanding these technologies and the differences between them.

What is Augmented Reality and how is it different from Virtual Reality?

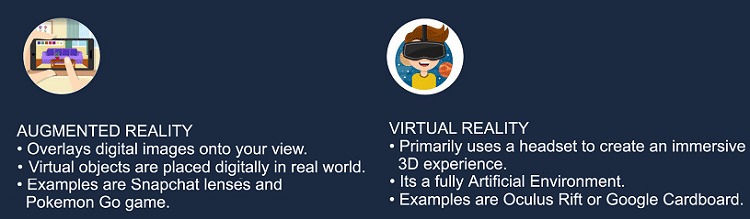

Augmented Reality is a direct or indirect live view of the real physical world in which computer-generated objects are placed using image processing. The word “augment” means to make something greater by adding to it. AR brings computing into the real world, letting you interact with digital objects and information in your environment.

In virtual reality, a simulated environment is created and the user is placed inside it. VR transports you to a new experience: instead of just seeing a place, you feel what it is like to be there, without physically going there. Oculus Rift and Google Cardboard are examples of VR headsets.

Mixed reality combines AR and VR: you can create a virtual environment and augment other objects into it.

The image and definitions above show the differences between these technologies.

The most important difference lies in the hardware itself. To experience VR, you need a headset that is either powered by a smartphone or connected to a high-end PC. These headsets require powerful, low-latency displays so that the virtual world is rendered smoothly, without dropped frames. AR, by contrast, doesn’t require a headset: you can simply point a phone camera at specific objects to experience headset-free AR at any time.

Apart from smartphones, you can use standalone smart glasses such as Microsoft HoloLens. HoloLens is a high-performance headset with various sensors and cameras built into it, designed specifically for AR experiences.

Use cases of Augmented Reality

Although AR is a young medium, it is already being used in a variety of sectors. In this section, we'll look at a few of its most popular use cases.

1. AR for shopping and retail: This sector uses AR extensively. AR lets you virtually try on watches, clothes, makeup, spectacles, and more. Lenskart, an online platform for buying spectacles, uses AR to show you how frames will actually look on you. Furniture is another strong use case: you can point the camera at any part of your home or office where you want to place furniture, and the app shows the item in 3D at its exact dimensions.

2. AR for Business: Professional organizations are also using AR to let people interact with their products and services. Retailers can give customers novel ways to engage with products, advertisers can reach consumers with immersive campaigns, warehouses can provide helpful navigation and instructions for workers, and architecture firms can display designs in 3D space.

3. AR for Social media: Social media platforms such as Snapchat and Facebook use AR for many kinds of filters. These filters digitally modify your face and make your photos more interesting and funny.

4. AR in gaming: In 2016, Pokémon Go became the first viral AR game. It was so engaging and realistic that many players got addicted to it. Now, many gaming firms use AR to make their characters more engaging and interactive.

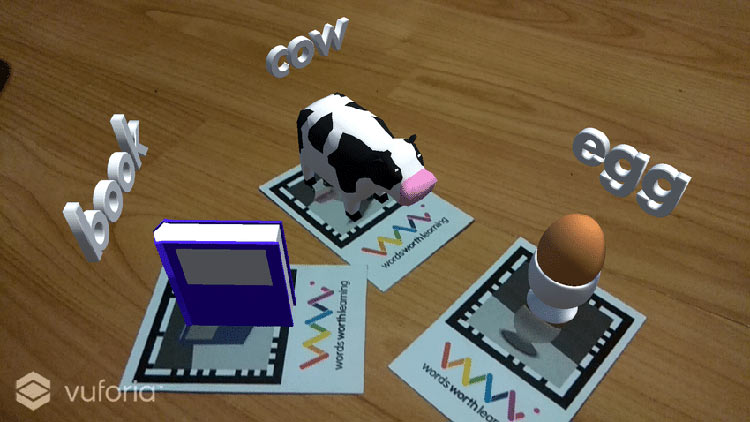

5. AR in Education: AR can make complex topics easier to teach. Google launched an educational AR application named Expeditions AR, which is designed to help teachers explain concepts to students with AR visuals. The AR visual below shows how a volcanic eruption takes place.

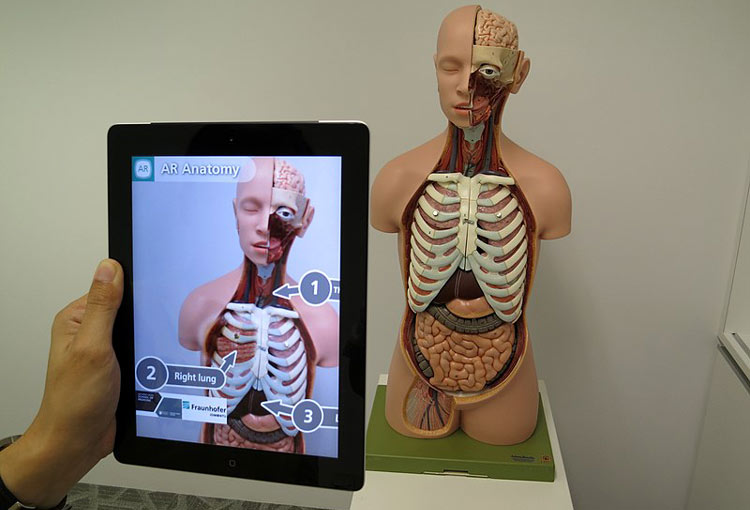

6. AR for Healthcare: AR is used in hospitals to help doctors and nurses plan and carry out surgeries. Interactive 3D visuals offer doctors much more information than 2D images, so AR can guide surgeons through complex operations one step at a time, and it could replace traditional charts in the future.

7. AR for non-profits: Nonprofit organizations can use AR to encourage deeper engagement around critical issues and help build brand identity. For example, an organization that wants to spread awareness about global warming could give a presentation on its impacts using interactive AR objects to educate people.

Hardware Requirements for Augmented Reality

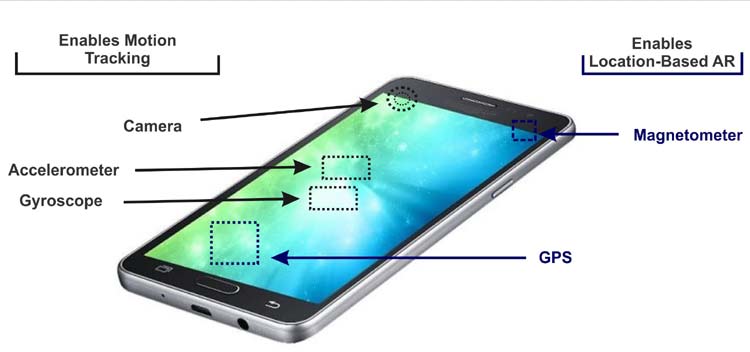

Every technology is built on its hardware. As described above, we can experience AR on a smartphone or on standalone smart glasses. These devices contain many different sensors that track the user’s surrounding environment.

Sensors such as the accelerometer, gyroscope, magnetometer, camera, and light sensor play a very important role in AR. Let’s look at the role each of these sensors plays.

Motion Tracking Sensors in Augmented Reality

- Accelerometer: This sensor measures acceleration, which can be static (like gravity) or dynamic (like vibrations). In other words, it measures the change in velocity per unit time. This helps the AR device track changes in motion.

- Gyroscope: The gyroscope measures the device’s angular velocity, from which its orientation (inclination) can be derived. When you tilt your AR device, it measures the rotation and feeds it to ARCore so that AR objects respond accordingly.

- Camera: The camera provides a live feed of the user’s surroundings, on which AR objects are overlaid. Beyond the camera itself, ARCore uses techniques such as machine learning and complex image processing to produce high-quality images and map the environment for AR.
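The accelerometer and gyroscope complement each other: the gyroscope tracks fast rotations but drifts when its angular velocity is integrated over time, while the accelerometer gives an absolute but noisy tilt reference from gravity. The sketch below fuses the two with a complementary filter, a simple textbook technique and not necessarily what ARCore does internally. The axis convention, the `(rate, ax, ay, az)` sample format, and the `alpha` weight are illustrative assumptions.

```python
import math


def accel_pitch(ax, ay, az):
    """Pitch (radians) implied by gravity alone.

    Assumes the device is roughly still, so the accelerometer reading is
    dominated by gravity. Hypothetical axis convention: tilting forward
    moves part of gravity onto the x axis.
    """
    return math.atan2(ax, math.sqrt(ay * ay + az * az))


def complementary_filter(samples, dt, alpha=0.98):
    """Fuse gyroscope rate and accelerometer tilt into one pitch estimate.

    samples: iterable of (pitch_rate_rad_per_s, ax, ay, az) tuples
    dt:      time between samples, in seconds
    alpha:   trust placed in the integrated gyroscope angle (illustrative value)
    """
    pitch = 0.0
    for rate, ax, ay, az in samples:
        gyro_estimate = pitch + rate * dt          # integrate angular velocity
        accel_estimate = accel_pitch(ax, ay, az)   # absolute, but noisy
        # Mostly follow the gyroscope, but keep pulling toward the gravity reference
        pitch = alpha * gyro_estimate + (1 - alpha) * accel_estimate
    return pitch
```

For example, a phone held still at a 30° tilt converges to a pitch of about 30°, while a gyroscope with a small constant bias settles near level instead of drifting without bound, because the accelerometer term keeps correcting it.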

Let’s understand motion tracking in detail.

Motion Tracking in Augmented Reality

AR platforms need to sense the user’s movement. For this, they use Simultaneous Localization and Mapping (SLAM) and Concurrent Odometry and Mapping (COM). SLAM is the process by which robots and smartphones build a map of the surrounding world while simultaneously tracking their own position within it, so they can act accordingly. It can draw on depth sensors, cameras, accelerometers, gyroscopes, and light sensors.

Concurrent Odometry and Mapping (COM) might sound complex, but essentially it helps a smartphone locate itself in space relative to the world around it. It captures visually distinct features in the environment, called feature points, such as a light switch or the edge of a table. Any high-contrast visual feature can serve as a feature point.
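To see why high-contrast spots such as corners make good feature points, the sketch below scores each pixel of a small grayscale image with the Shi-Tomasi corner measure: the smaller eigenvalue of the local gradient structure tensor. This is a classic textbook detector used here as a stand-in; ARCore’s own feature detector is not described in this article. The image format (a list of rows of floats) and the `threshold` value are assumptions.

```python
import math


def gradients(img):
    """Central-difference image gradients; border pixels are left at zero."""
    h, w = len(img), len(img[0])
    gx = [[0.0] * w for _ in range(h)]
    gy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            gy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0
    return gx, gy


def shi_tomasi_points(img, threshold):
    """Return (x, y) pixels whose corner score exceeds threshold.

    Score = smaller eigenvalue of the 2x2 structure tensor, with gradient
    products summed over a 3x3 window around the pixel.
    """
    h, w = len(img), len(img[0])
    gx, gy = gradients(img)
    points = []
    for y in range(2, h - 2):
        for x in range(2, w - 2):
            sxx = syy = sxy = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    ix, iy = gx[y + dy][x + dx], gy[y + dy][x + dx]
                    sxx += ix * ix
                    syy += iy * iy
                    sxy += ix * iy
            # Smaller eigenvalue of [[sxx, sxy], [sxy, syy]]
            half_trace = (sxx + syy) / 2.0
            det = sxx * syy - sxy * sxy
            min_eig = half_trace - math.sqrt(max(half_trace ** 2 - det, 0.0))
            if min_eig > threshold:
                points.append((x, y))
    return points
```

Flat regions score zero because they have no gradient, and a straight edge also scores near zero because its gradient points in only one direction, so it cannot pin down a position along the edge. Only corners, where intensity changes in two directions, survive the threshold.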

Location Tracking Sensors in AR

- Magnetometer: This sensor measures the Earth’s magnetic field, which gives the AR device a basic orientation relative to magnetic north. It helps the smartphone find a particular direction, which allows it to auto-rotate digital maps depending on your physical orientation. This sensor is key to location-based AR apps. A widely used type of magnetic sensor is the Hall-effect sensor, which we have previously used to build a virtual reality environment with Arduino.

- GPS: GPS is a global navigation satellite system that provides geolocation and time information to a GPS receiver, such as the one in a smartphone. On ARCore-capable smartphones, GPS helps enable location-based AR apps.
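In a location-based AR app, the magnetometer and GPS work together: GPS gives the user’s position, the magnetometer gives the direction the phone is facing, and the difference tells the app where to draw a point of interest. The sketch below assumes the phone is lying flat (no tilt compensation), ignores magnetic declination, and uses a hypothetical axis convention; the function names are illustrative.

```python
import math


def compass_heading(mx, my):
    """Heading in degrees clockwise from magnetic north for a phone lying flat.

    Assumed axes: x points to the phone's right, y out of its top edge.
    No tilt compensation and no correction for magnetic declination.
    """
    return math.degrees(math.atan2(-mx, my)) % 360


def bearing_to(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing (degrees from north) from point 1 to point 2."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    x = math.sin(dlon) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return math.degrees(math.atan2(x, y)) % 360


def relative_angle(bearing_deg, heading_deg):
    """Signed angle in [-180, 180): positive means the target is to the user's right."""
    return (bearing_deg - heading_deg + 180) % 360 - 180
```

For example, if a landmark lies due east (bearing 90°) and the phone faces north (heading 0°), `relative_angle` returns 90, so the app would draw the marker to the right, or off-screen if that falls outside the camera’s field of view.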

What makes AR feel real?

Many tools and techniques are used to make AR feel real and interactive.

1. Placing and positioning Assets: Assets are the visible AR objects. To maintain the illusion of reality in AR, digital objects need to behave the same way as real ones, which means they must stick to a fixed point in the environment. That point can be something concrete, like the floor, a table, or a wall, or it can be in mid-air. As the user moves, assets should stay fixed at their predefined points instead of jumping around randomly.

2. Scale and size of assets: AR objects need to scale correctly. For example, a car coming towards you looks small at first and gets bigger as it approaches. Similarly, a painting seen from the side looks different from the same painting seen from the front. AR objects must behave the same way to feel like real objects.

3. Occlusion: Occlusion is what happens when one object is blocked by another. When you hold your hand in front of your eyes, you would be surprised if you could still see what is behind it. AR objects should follow the same rule: when one AR object is in front of another, only the front object should be visible where they overlap, hiding the object behind it.

4. Lighting for increased realism: When the lighting of the surroundings changes, AR objects need to respond to the change. For example, if a door is opened or closed and the light in the room changes, an AR object’s color, shading, and appearance should change too. Its shadow should also move accordingly to make the AR feel real.
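Two of the rules above, scale and occlusion, come down to simple geometry. Under a pinhole-camera model, an object’s on-screen size is proportional to its real size divided by its distance, which is why the approaching car grows. Occlusion can be resolved with a per-pixel depth test (a minimal z-buffer): at each pixel, only the nearest object is kept. The sketch below treats each object as having a single depth and uses hypothetical names and units; for a real object such as a hand to occlude virtual content, the device additionally needs a depth estimate of the real scene.

```python
def projected_height_px(object_height_m, distance_m, focal_length_px):
    """Pinhole-camera model: on-screen size shrinks in proportion to 1/distance."""
    if distance_m <= 0:
        raise ValueError("object must be in front of the camera")
    return focal_length_px * object_height_m / distance_m


def composite(layers, width, height):
    """Resolve occlusion with a per-pixel depth test (a minimal z-buffer).

    layers: list of (label, depth_m, covered_pixels), where covered_pixels is a
    set of (x, y) screen positions the object covers. Each object is assumed
    to have a single depth. The nearest object wins at every pixel, regardless
    of the order in which the layers are drawn.
    """
    depth = [[float("inf")] * width for _ in range(height)]
    color = [[None] * width for _ in range(height)]
    for label, z, pixels in layers:
        for x, y in pixels:
            if z < depth[y][x]:  # closer than whatever is already stored here
                depth[y][x] = z
                color[y][x] = label
    return color
```

For example, with a focal length of 1000 px, a 1.5 m tall car is 150 px tall at 10 m and 300 px tall at 5 m: halving the distance doubles the on-screen size. The depth test is order-independent, which is why it is preferred over simply drawing objects from back to front.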

Tools to create Augmented Reality

Several online platforms and dedicated software tools exist for creating AR content. Since Google has its own ARCore, it provides good support for beginners who want to build AR. A few other AR tools are briefly described below:

Poly is an online library by Google where people can browse, share, and remix 3D assets. An asset is a 3D model or scene created using Tilt Brush, Blocks, or any 3D program that produces a file that can be uploaded to Poly. Many assets are licensed under the CC BY license, which means developers can use them in their apps, free of charge, as long as the creator is given credit.

Tilt Brush lets you paint in 3D space in virtual reality, using three-dimensional brush strokes, stars, light, and even fire. Your room becomes your canvas.

Blocks helps you create 3D objects in virtual reality, regardless of your modeling experience, using six simple tools.

Unity is a cross-platform game engine developed by Unity Technologies, which is primarily used to develop both three-dimensional and two-dimensional video games and simulations for computers, consoles, and mobile devices. Unity has become a popular game engine for creating VR and AR content.

Sceneform is a 3D framework with a physically based renderer, optimized for mobile, that makes it easy for Java developers to build augmented reality apps.

Important terms used in AR and VR

- Anchors: User-defined points of interest on which AR objects are placed. Anchors are created and updated relative to geometry (planes, points, etc.).

- Asset: It refers to a 3D model.

- Design Document: A guide for your AR experience that contains all of the 3D assets, sounds, and other design ideas for your team to implement.

- Environmental understanding: Understanding the real-world environment by detecting feature points and planes and using them as reference points to map the environment. Also referred to as context-awareness.

- Feature Points: These are visually distinct features in your environment, like the edge of a chair, a light switch on a wall, the corner of a rug, or anything else that is likely to stay visible and consistently placed in your environment.

- Hit-testing: Takes an (x, y) coordinate on the phone’s screen (provided by a tap or any other interaction your app supports) and projects a ray into the camera’s view of the world. This allows users to select or otherwise interact with objects in the environment.

- Immersion: The sense that digital objects belong in the real world. Breaking immersion means that the sense of realism has been broken; in AR, this is usually by an object behaving in a way that does not match our expectations.

- Inside-Out Tracking: When the device has internal cameras and sensors to detect motion and track positioning.

- Outside-In Tracking: When the device uses external cameras or sensors to detect motion and track positioning.

- Plane Finding: The smartphone-specific process by which ARCore determines where horizontal and vertical surfaces are in your environment and uses those surfaces to place and orient digital objects.

- Raycasting: Projecting a ray to help estimate where an AR object should be placed so that it appears on a real-world surface in a believable way; used during hit-testing.

- User Experience (UX): The process and underlying framework of enhancing user flow to create products with high usability and accessibility for end-users.

- User Interface (UI): The visuals of your app and everything that a user interacts with.
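The hit-testing and raycasting entries above can be made concrete with a little geometry: turn the tapped pixel into a ray using the camera’s intrinsics, then intersect that ray with a detected plane. The sketch assumes a pinhole camera whose axes are aligned with the world (x right, y up, looking along -z) and a horizontal plane at a known height; a real AR framework would also apply the camera’s tracked pose. `Intrinsics`, `screen_to_ray`, and `hit_test_plane` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    fx: float  # focal length in pixels, x
    fy: float  # focal length in pixels, y
    cx: float  # principal point, x (pixels)
    cy: float  # principal point, y (pixels)


def screen_to_ray(u, v, k):
    """Ray direction in camera space for a tap at pixel (u, v).

    Assumed camera convention: x right, y up, looking along -z.
    Screen v grows downward, hence the sign flip on y.
    """
    return ((u - k.cx) / k.fx, -(v - k.cy) / k.fy, -1.0)


def hit_test_plane(origin, direction, plane_y):
    """Intersect a ray with the horizontal plane y = plane_y.

    Returns the hit point, or None if the ray is parallel to the plane
    or the plane lies behind the ray origin.
    """
    ox, oy, oz = origin
    dx, dy, dz = direction
    if abs(dy) < 1e-9:
        return None
    t = (plane_y - oy) / dy  # distance along the ray, in units of `direction`
    if t <= 0:
        return None
    return (ox + t * dx, oy + t * dy, oz + t * dz)
```

For a camera 1.5 m above the floor, tapping below the image center yields a ray pointing down and forward that hits the floor in front of the user; that point is where an anchor for a new asset would be created. In ARCore’s Java API, the corresponding step is `Frame.hitTest`, whose `HitResult` objects can create an `Anchor` via `createAnchor()`.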