Renesas Electronics Corporation announced the joint development, with StradVision, of a deep learning-based object recognition solution for smart cameras used in next-generation advanced driver assistance system (ADAS) applications, including cameras for ADAS Level 2 and above. The new smart camera solution uses deep learning to recognize objects with high precision and low power consumption, and it is intended to accelerate the widespread adoption of ADAS.

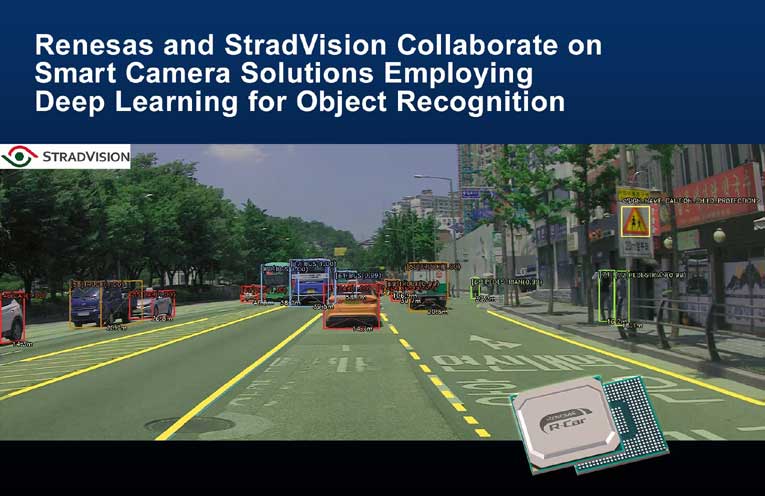

Developed jointly by Renesas and StradVision, the solution can recognize vulnerable road users (VRUs) such as pedestrians and cyclists, as well as other vehicles and lane markings. StradVision has optimized its software for the Renesas R-Car V3H and R-Car V3M automotive system-on-chip (SoC) products, which have a proven track record in mass-produced vehicles. These R-Car devices include a dedicated deep learning engine called CNN-IP (Convolutional Neural Network Intellectual Property), which enables them to run StradVision's SVNet automotive deep learning network at high speed.

Key Features

1) The solution supports early evaluation for mass production

StradVision's SVNet deep learning software is a powerful AI perception solution for mass-produced ADAS systems because it recognizes objects precisely in low light and handles occlusion, where objects are partially hidden by other objects. The basic software for the R-Car V3H can simultaneously recognize vehicles, people, and lanes while processing images at 25 frames per second, which enables swift evaluation and proof-of-concept (POC) development. Building on these basic capabilities, developers can customize the software to add road signs, road markings, and other objects as recognition targets.

2) R-Car V3H and R-Car V3M SoCs increase smart camera system reliability while reducing cost

The Renesas R-Car V3H and R-Car V3M feature the IMP-X5 image recognition engine. Combining deep learning-based recognition of complex objects with highly verifiable, rule-based image recognition processing defined by engineers allows designers to build robust systems. The on-chip Image Signal Processor (ISP) converts sensor signals for both image rendering and recognition processing, so a system can be built with inexpensive cameras that lack a built-in ISP, reducing the overall bill of materials (BOM) cost.

The new joint deep learning solution, including software and development support from StradVision, will be available to developers by early 2020.